One thing I think is still tough on the Facebook Ads platform is testing. Considering how powerful the platform otherwise is, it still baffles me how many issues it has.

It used to be a little simpler to work around some of Facebook’s quirks, but that has actually gotten harder. Luckily, they released their Split Testing feature.

There’s a lot it does right, but there’s one major thing I think it gets wrong.

This is a long post, so if you’re TL;DR-ish, here are some places to hop to:

Creating a Split Test

Testing Delivery Optimization

Testing Audiences

Testing Placements

When I Use Split Testing

First, let’s just touch on why testing in FB is normally a little frustrating.

Creative Testing Is Hinky

As I mention in this post under the section called “Mixing Creative Types,” Facebook favors certain creative types. Relatedly, even when all your ads use the same ad unit type, it tends to pick a winner really fast: like after 500 to 1,000 impressions fast!

The usual workaround is to put each creative in its own ad set. So you have Ad Set A with whatever targeting; you duplicate it as Ad Set B and change only the creative.

But then…

You’re Overlapping Your Audiences

Frequency is measured at the ad set level, so if you have multiple ad sets targeting the same people, you run the risk of beating them over the head with your ads. The impact is usually minimal if your audience is big enough, but it still is (or WAS) a risk. I say “was” because…

Facebook Started Throttling

Creative testing isn’t the only reason to run different ad sets against the same audience, either: maybe you want to test bidding to Conversions vs. bidding to Clicks. Maybe you want to test two different audiences, even if they overlap somewhat. You get the idea.

Obviously, this testing isn’t perfect. You are likely showing ads to people who exist in multiple ad sets, which means your frequency is higher than Facebook reports because it only shows you the frequency per ad set.
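To make the undercount concrete, here is a minimal Python sketch with made-up numbers. It assumes frequency is impressions divided by unique people reached, and it models each ad set’s delivery as a hypothetical list of user IDs (one entry per impression); none of this is real account data or anything from Facebook’s API.

```python
from collections import Counter


def frequency(impressions: list[str]) -> float:
    """Average impressions per unique person reached."""
    return len(impressions) / len(set(impressions))


# Hypothetical delivery logs: one user ID per impression.
ad_set_a = ["u1", "u1", "u2", "u2", "u3", "u3"]  # 6 impressions, 3 people
ad_set_b = ["u2", "u2", "u3", "u3", "u4", "u4"]  # 6 impressions, 3 people

print(frequency(ad_set_a))  # 2.0, what Ad Set A reports
print(frequency(ad_set_b))  # 2.0, what Ad Set B reports

# Across both ad sets: 12 impressions spread over only 4 people.
combined = ad_set_a + ad_set_b
print(frequency(combined))  # 3.0

# People in both audiences (u2, u3) actually saw 4 ads each.
per_user = Counter(combined)
print(per_user["u2"], per_user["u3"])  # 4 4
```

Each ad set reports a frequency of 2, but the people sitting in both audiences saw twice that many ads.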

Facebook wizards got wise to the multiple ad set game, and obviously they don’t want you flooding people’s News Feeds with tons of ads. A few months ago, a rep told me they would start de-duping on the back end. I didn’t see it for a while, but I have recently started seeing it in action.

Sounds great, but…

Basically, the algorithm will weight impressions toward the better-performing ad set. So even if you’re running two ad sets with different creative, it will now pick a winner and favor it, just like it does with individual ads within an ad set.

Argh.

Enter the Split Testing Feature

The Split Testing option helps solve this: it lets you specify what you want to test, and Facebook will serve the variations more evenly, without overlapping audiences. I actually LOVE this.

But there’s one, huge, massive problem I have with it:

You cannot pick the winner and run it indefinitely. It’s a controlled test where you allocate a lifetime budget and choose an end date, and that’s it.

So, SO frustrating. You find a winner and then have to relaunch it as a new ad set and lose all that history. And as some of you well know, if the planets don’t align just right when you launch, it’s really hit or miss whether you’ll replicate your results.

This is the biggest failing of the tool as it exists today. There are some others, mostly in the limitations of what you can test – I’ll note these as appropriate in the walkthrough below.

Otherwise, it’s a promising tool, and it could become much more helpful if they add features that address its current limits.

How the Split Testing Feature Works

Enabling the Split Test

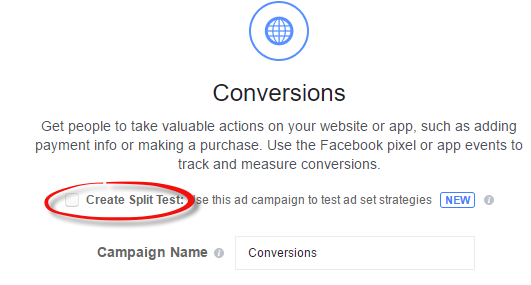

When you create a new Campaign, there’s a little checkbox that allows you to unlock the Split Testing feature:

Once you check that, you go to the your Ad Set creation screen and you’ll have an extra section for your testing:

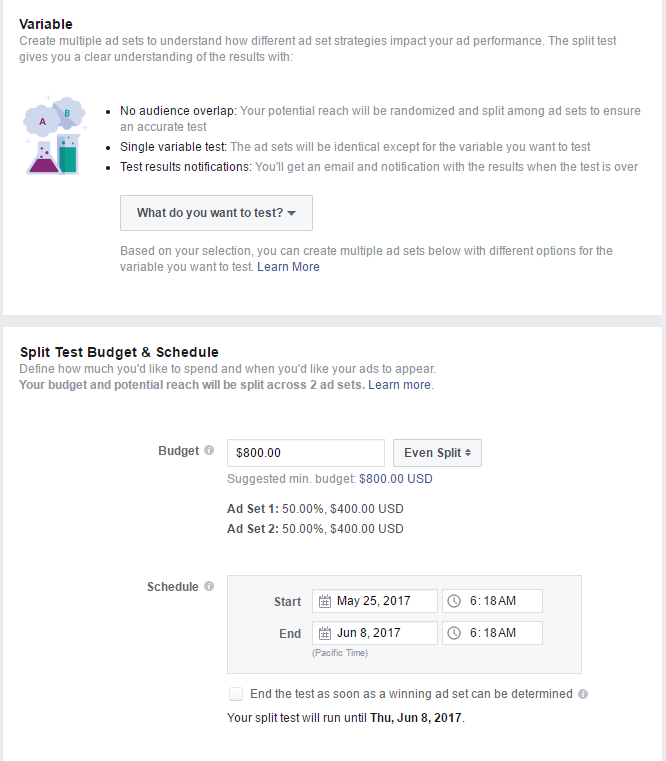

You have the option for three kinds of testing, currently: Audience, Placement, and Delivery Optimization (i.e., different types of optimization for the algorithm to go after).

And there is that godforsaken area to specify an end date and budget. Rawr.

You can test up to three Ad Sets, changing only the variable you want to test between them.

Setting Up Your Test

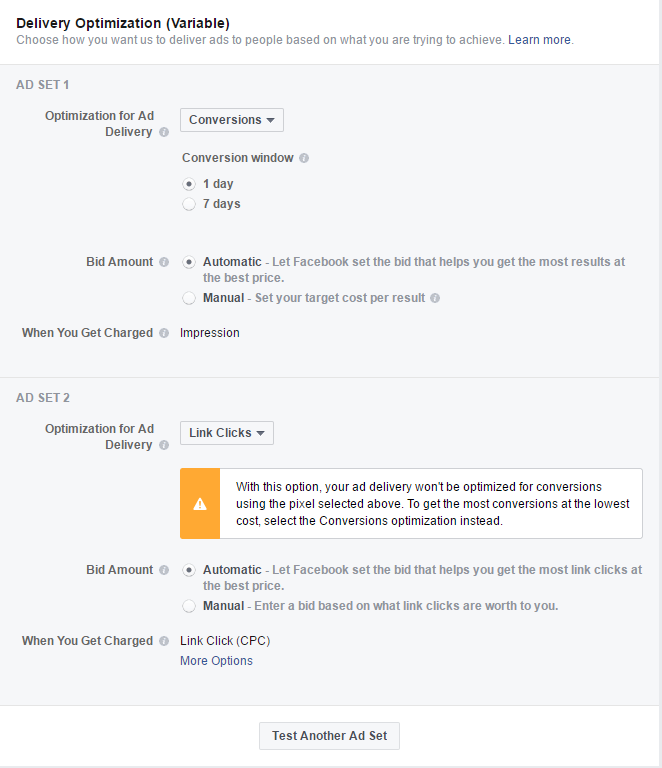

Delivery Optimization

Limitation:

There’s a big limitation here I wish they’d fix. Usually you set the event you want the algorithm to optimize to at the Ad Set level.

However, in the split test environment, it’s set at the Split Test level, which means the variable you test can’t be the event the algorithm optimizes to. That’s a little annoying, because sometimes microconversions have more data than the end conversion. For example, maybe you run a hotel booking or Airbnb-type business where people can request dates, but a request doesn’t always result in a booking. You may want to test optimizing to a Booking Submission event, because ultimately there are more of those than Bookings.

It would be nice to easily see if the CPA does better optimizing to that vs. a secured Booking, right?

Well, too bad for you!

So, yeah, disappointment on that front.

The Good:

You can test some pretty big things, like optimizing for Link Clicks, Impressions, or Reach vs. Conversions. You can also test optimizing to a 1-day conversion window vs. a 7-day one. So the stuff it offers IS valuable; I just wish it had more. Because I’m a greedy data-eater.

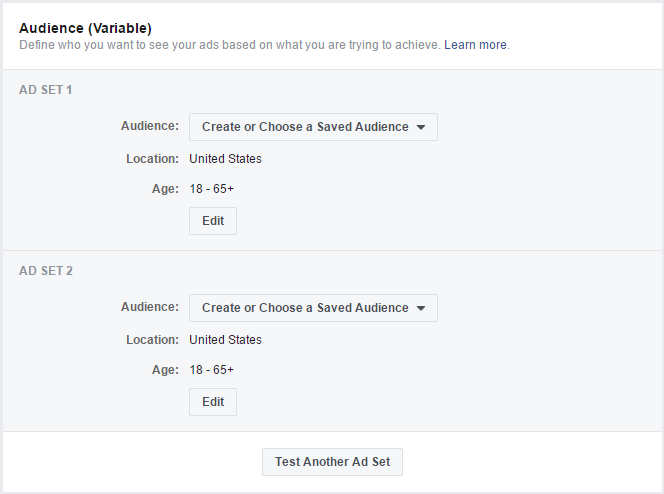

Audiences

Limitation:

In a regular Ad Set, there is a checkbox for “expanding interests.”

A lot of folks wonder how the users reached through expanded interests compare to those who aren’t, but you can’t test that. The option isn’t there when you set up audiences for a Split Test.

The Good:

The rest of it works like you think it should. You can run two audiences, which, yes, you can do with a regular ad set setup, but the split test ensures that users who fall into both groups are placed in only one of them for the purpose of testing. It keeps the sets of users unique between the ad sets.
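Facebook doesn’t publish how it keeps the groups separate, so the following is only a hypothetical Python sketch of one common approach: hash each user ID so that a person who qualifies for more than one audience is deterministically assigned to exactly one test cell. The `assign_cell` and `split_audiences` helpers and the sample audiences are illustrative names, not part of any Facebook API.

```python
import hashlib


def assign_cell(user_id: str, n_cells: int, salt: str = "test-1") -> int:
    """Deterministically map a user to one of n_cells buckets (hypothetical)."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).hexdigest()
    return int(digest, 16) % n_cells


def split_audiences(audiences: list[set[str]], salt: str = "test-1") -> list[set[str]]:
    """Put every user in exactly one cell among the audiences they qualify for."""
    cells: list[set[str]] = [set() for _ in audiences]
    everyone = set().union(*audiences)
    for user in sorted(everyone):
        # Indexes of the audiences this user belongs to.
        eligible = [i for i, aud in enumerate(audiences) if user in aud]
        # Overlapping users are hashed into one of their eligible cells.
        pick = eligible[assign_cell(user, len(eligible), salt)]
        cells[pick].add(user)
    return cells


lookalike = {"u1", "u2", "u3", "u4"}  # e.g. a 1% lookalike (made up)
interests = {"u3", "u4", "u5"}        # e.g. an interest audience (made up)
cell_a, cell_b = split_audiences([lookalike, interests])
print(cell_a & cell_b)  # set()
```

Hashing (rather than random assignment at serve time) keeps each person in the same cell for the whole test, which is what keeps the two ad sets’ users from overlapping.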

Placement

Limitation:

There really aren’t any with this one that I can easily identify – it’s straightforward and works like you’d think it would.

The Good:

People tend to have very strong stances on whether to separate their ad sets by device type. Some always do, and others have seen performance improve when they combine them and let Facebook’s algorithm work its magic. Facebook reps will almost always recommend the latter.

This split testing option lets you see which way might be best for your account and its situation.

When I Use the Split Testing Feature

Normally I use this to test a theory and/or run a quick test to determine which direction to take an existing Ad Set. Because you cannot run it on an ongoing basis, nor can you restart it once it ends (!!!!!!!!!!!!!!!), it isn’t something I can rely on to do heavy lifting for the account long-term.

For new accounts, especially if they have a longer sales cycle, the 1-day vs. 7-day conversion window test can be extremely helpful.

I’ve also used it to get a sense of whether getting more specific with an audience will help or hurt me. For example, maybe you have a 1% lookalike audience that’s doing great, but you want to add a layer for homeowners. You know your costs will probably go up as you get more specific, but that might be offset by a better conversion rate. You can do a small, concentrated test to get some directional data to influence your longer-term strategy.
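The trade-off here is simple arithmetic: cost per acquisition equals cost per click divided by the click-to-conversion rate. This minimal Python sketch uses purely hypothetical numbers (not results from any account) to show how a pricier, more specific audience can still come out ahead.

```python
def cpa(cost_per_click: float, conversion_rate: float) -> float:
    """Cost per acquisition = cost per click / click-to-conversion rate."""
    return cost_per_click / conversion_rate


# Hypothetical figures for illustration only.
broad = cpa(cost_per_click=1.00, conversion_rate=0.02)    # 1% lookalike alone
layered = cpa(cost_per_click=1.30, conversion_rate=0.03)  # + homeowners layer

print(round(broad, 2))    # 50.0
print(round(layered, 2))  # 43.33
```

In general, the layered audience wins whenever its cost per click rises by a smaller factor than its conversion rate does (here 1.3x vs. 1.5x), which is exactly the kind of directional answer a short split test can give you.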

How It Reports

The reporting looks just as it would if you’d run two Ad Sets in the same campaign. You can add or remove columns as usual.

Here’s an example of Mobile (first line) vs. Desktop (second line):

So there you have it in a ~1400 word nutshell. Hope springs eternal that they will continue to update the tool and its capabilities!

Thanks for this one, Susan.

I just started using the Split Test feature earlier this month, so I wasn’t aware of a lot of the limitations you’ve outlined.

But, baby steps: it’s all good progress if it continues to go in this direction!

Cheers, James